By understanding how filesystems work, you can gain a better sense of where you are, and are not, at risk. Most modern filesystems used for enterprise storage are what is called "journalled" (also known as "transactional"). In this article, I'll try to explain how these filesystems behave so that you can better assess your risk, and therefore be better able to recover if the unthinkable happens: your data goes away. Please note that I am in no way implying that the behavior described here is universal to all filesystems; it is only my understanding of most of the modern filesystems that I work with.

No filesystem is risk-free. There will always be the potential for data loss; however, most enterprise-level filesystems do a good job of preserving the integrity of your files. Their various pieces work together to ensure that your data is there when you need it, whatever the circumstances. Even so, the geniuses (and I mean that) who design these systems can't make your files immune to failures that we all know could happen. The idea is to understand that risk and make the best choices we can to protect ourselves in the event that it does. You may go your entire career without ever encountering this, but it's better to be prepared than caught wanting.

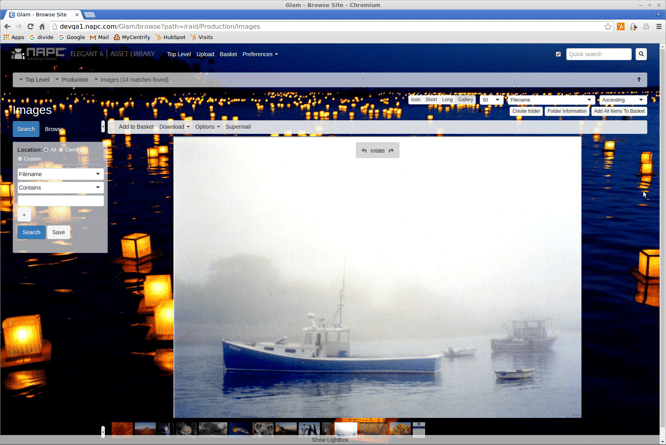

All hierarchical (folder tree) filesystems contain these two components:

- Metadata
- Data Store

This includes non-journalled filesystems as well as journalled filesystems.

The Metadata generally includes the superblock and the inode table (or file table). Together with the directory entries, the file table is essentially an index of the file names, where on the disk each file's data starts, and where in the hierarchy each file resides. It may also contain other information, such as size, integrity references (checksums), or perhaps storage type (binary or text). The filesystem uses this information to store and retrieve files, and the superblock also records details of how this specific filesystem is laid out, such as its block size and overall size.
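To make this a little more concrete, here is a minimal Python sketch of what one entry in a file table might hold. The `FileRecord` class, its fields, and the `add_file` helper are hypothetical and heavily simplified; real inodes are laid out very differently and vary from filesystem to filesystem. CRC-32 (via Python's `zlib.crc32`) simply stands in for whatever checksum a given filesystem actually uses.

```python
from dataclasses import dataclass
import zlib

@dataclass
class FileRecord:
    """One entry in a hypothetical, simplified file table."""
    name: str          # file name
    parent: str        # path of the folder that contains the file
    first_block: int   # block number where the file's data starts
    size: int          # size in bytes
    checksum: int      # integrity reference (CRC-32 of the contents)

def make_record(name: str, parent: str, first_block: int, data: bytes) -> FileRecord:
    """Build a record, computing the size and checksum from the file's contents."""
    return FileRecord(name, parent, first_block, len(data), zlib.crc32(data))

# The "index": full path -> record
file_table: dict[str, FileRecord] = {}

def add_file(record: FileRecord) -> None:
    """Register a record under its full path in the hierarchy."""
    path = record.parent.rstrip("/") + "/" + record.name
    file_table[path] = record

add_file(make_record("report.txt", "/projects", 9434, b"quarterly numbers"))
print(file_table["/projects/report.txt"].first_block)  # 9434
```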

The Data Store is simply the space on disk where the actual file data is stored. Most filesystems divide this space into blocks of a predetermined, fixed size. Fixed-size blocks tend to make reading and writing much faster, because the filesystem driver has far less math to do to find where a file's data starts and where it ends. There are some exceptions, and these are typically heavily optimized for the way they handle variable-sized blocks, so any performance hit you take for "doing the math" is more than made up for by having a filesystem optimized for the type of files you're storing on it.
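Here's the "math" in question, as a small Python sketch. With fixed-size blocks, finding which of a file's blocks holds a given byte is a single division, and so is working out how many blocks a file needs. The 4096-byte block size is an assumption for illustration; it is a common choice, but block sizes vary between filesystems and configurations.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes; real filesystems vary

def locate(offset: int, block_size: int = BLOCK_SIZE) -> tuple[int, int]:
    """Return (index of the file's block holding this byte, offset within that block)."""
    return divmod(offset, block_size)

def blocks_needed(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of whole blocks needed to hold a file (ceiling division)."""
    return -(-file_size // block_size)

print(locate(10_000))         # (2, 1808)
print(blocks_needed(10_000))  # 3
```

With variable-sized blocks, the driver would instead have to add up block lengths until it reached the right one, which is the extra work the paragraph above refers to.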

To retrieve a file, the filesystem looks up the file in the metadata table, determines where on the disk the file starts, and reads that piece. While reading a single file, the filesystem driver may find that the file is not stored in one contiguous (one after the other) group of blocks. This is called 'fragmentation'. No high-performance filesystem that I know of can avoid fragmentation entirely. As a filesystem fills up, with regular file creations and deletions, it has to become creative about how it stores files. Although the ideal is to store each file contiguously, that isn't always possible without rearranging a lot of other allocated blocks to free up a large enough group. Doing so would make the filesystem incredibly slow whenever you wrote to it, since it would have to reorganize data every time it wrote a file too big for the available contiguous space. Modern disks have improved dramatically at these non-contiguous reads and writes (which require 'random seeks'), so as long as a filesystem has a reasonable amount of free space (14% or more), performance should remain acceptable.
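One way to picture fragmentation: take the list of blocks a file occupies and group it into contiguous runs. Every run after the first means the disk heads have to jump somewhere else. The `extents` function below is a hypothetical helper for illustration, not part of any real filesystem driver.

```python
def extents(blocks: list[int]) -> list[tuple[int, int]]:
    """Group a file's block numbers into contiguous runs of (start_block, length)."""
    runs: list[tuple[int, int]] = []
    for b in blocks:
        # Extend the current run if this block directly follows it.
        if runs and b == runs[-1][0] + runs[-1][1]:
            start, length = runs[-1]
            runs[-1] = (start, length + 1)
        else:
            runs.append((b, 1))  # a jump: start a new run
    return runs

file_blocks = [9434, 9435, 9436, 10471, 10472, 33455, 8167]
runs = extents(file_blocks)
print(runs)  # [(9434, 3), (10471, 2), (33455, 1), (8167, 1)]
print("fragmented" if len(runs) > 1 else "contiguous")
```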

Now to the hard part: writing files. To write a file, the filesystem first determines the size of the file being written, and then tries to find a group of adjacent blocks large enough to store the entire thing. Storing a file in contiguous blocks means the mechanical heads reading the underlying physical disk don't have to move much to reach the next block, which is much faster and more efficient. If it finds such a group, it records in the Metadata where it is storing the file and writes the actual file data to the Data Store (the exact order of these two writes varies between filesystems). If it cannot find a single group of blocks to hold the whole file, it finds the optimum set of blocks across which to distribute it. Different filesystems define 'optimum' differently, so I won't get into that here; suffice it to say, some filesystems are better at it than others. As a filesystem fills up, the driver has a much harder time storing files: it has to break files into more pieces, and therefore perform more calculations to get it all right.
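As a rough sketch of the allocation step, here is a first-fit allocator over a hypothetical free-block map: it takes the first contiguous run of free blocks that is long enough, and if there isn't one, it spreads the file across whatever free blocks are left. First-fit is just one simple strategy chosen for illustration; real filesystems each have their own idea of 'optimum', and the `allocate` function and list-of-booleans map are assumptions, not any filesystem's actual design.

```python
def allocate(free: list[bool], count: int) -> list[int]:
    """Reserve `count` blocks, preferring one contiguous run (first fit).

    `free[i]` is True when block i is unused. If no single run is long
    enough, fall back to the lowest-numbered free blocks (fragmenting
    the file). Raises ValueError when there isn't enough free space.
    """
    run_start, run_len = 0, 0
    for i, is_free in enumerate(free):
        if is_free:
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len == count:
                chosen = list(range(run_start, run_start + count))
                break
        else:
            run_len = 0  # an allocated block breaks the run
    else:
        # No contiguous run was long enough: scatter the file.
        candidates = [i for i, is_free in enumerate(free) if is_free]
        if len(candidates) < count:
            raise ValueError("not enough free blocks")
        chosen = candidates[:count]
    for i in chosen:
        free[i] = False  # mark the chosen blocks as allocated
    return chosen

disk = [False, True, True, False, True, True, True, False, True]
print(allocate(disk, 3))  # [4, 5, 6]  (a contiguous run was available)
print(allocate(disk, 3))  # [1, 2, 8]  (no run of 3 left, so the file is split)
```

The second call shows the fallback kicking in on a nearly full map, which is exactly when files start getting fragmented.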

What happens when Murphy's Law strikes?

Filesystem breakage, or filesystem corruption as it's known, is most often caused by underlying hardware problems, such as the physical disk dying or 'going away', or by a power outage. (Some filesystems are more fragile than others and can break through regular use, but that's not typical of true enterprise filesystems.) If the disk isn't mounted, or no writes are happening at the time of the failure, the filesystem is very likely to be unharmed, and a simple repair should bring everything back to normal. However, most enterprise filesystems are in a constant state of flux, with files being written, deleted, moved or modified nearly all of the time, so such a failure is usually cause for concern.

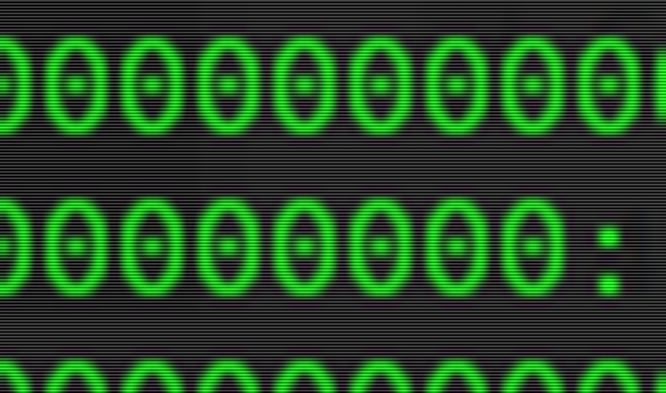

When such a failure occurs, any operation in progress is cut off at the point of the failure. If the filesystem was in the middle of writing a file, not only did the file fail to make it to disk in full, but parts of the file and its metadata record are likely incomplete, and there's no warning of that other than the broken parts themselves. In a non-journalled filesystem, this means the damage may not be noticed until someone finds that partially written file. When they try to open it, any number of things can happen. The worst possible scenario is that the storage chain is badly broken. For instance, the file may start at block 9434, jump from there to block 10471, then to block 33455, and then back to block 8167. If the system died before it had a chance to record all of that, the filesystem might think the file goes from block 10471 to block 2211, simply because that's the value that was stored for that block previously (or perhaps it's just random data that was there to start with). If block 2211 is somewhere within your filesystem's superblock and someone tries to re-save that file, you've just broken your entire filesystem. Oooh, that hurts.
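A repair tool can't simply trust a chain like that; it has to walk it and sanity-check every link. Below is a simplified Python sketch of such a check. It assumes a hypothetical map from each block to the next block of the same file (similar in spirit to FAT-style chains), an `END` marker for the last block, and that blocks 0 to 4095 are reserved for the superblock and other metadata. All of these are illustrative assumptions, not how any particular repair tool is implemented.

```python
from typing import Optional

END = -1  # hypothetical marker meaning "this is the file's last block"

def check_chain(next_block: dict[int, int], start: int,
                reserved: range, total_blocks: int) -> Optional[str]:
    """Follow a file's block chain; return the first problem found, or None."""
    seen: set[int] = set()
    block = start
    while block != END:
        if block in seen:
            return f"loop back to block {block}"
        if not 0 <= block < total_blocks:
            return f"block {block} is outside the disk"
        if block in reserved:
            return f"block {block} lies in the reserved metadata area"
        if block not in next_block:
            return f"no link recorded after block {block}"
        seen.add(block)
        block = next_block[block]
    return None  # the chain ended cleanly

RESERVED = range(0, 4096)  # assumed superblock/metadata area
good = {9434: 10471, 10471: 33455, 33455: 8167, 8167: END}
bad = {9434: 10471, 10471: 2211, 2211: END}  # stale pointer to block 2211
print(check_chain(good, 9434, RESERVED, 65536))  # None
print(check_chain(bad, 9434, RESERVED, 65536))   # block 2211 lies in the reserved metadata area
```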

This is where journalling comes in. With a journalled filesystem, every operation is written down ahead of time in the journal; then the operation is performed; then it's removed from the journal. After a failure like the one described in the last paragraph, when the filesystem comes back up it sees that there are operations still in the journal and can "roll back" those operations as if they never happened, returning the filesystem to its last known good state. (Some filesystems instead finish, or "replay", operations that were completely recorded in the journal; the end result is the same.) This ensures that each modification to the filesystem is 'atomic': it either happens completely or not at all. Even if some of those operations were partly carried out, the journal holds every operation that was not yet complete, so on restart the filesystem can see what was being done and return to the state it was in before the partially complete operation. Although this isn't totally foolproof, it is miles ahead of the alternative. It reduces the possibility of complete data loss to failures in which the journal, the Data Store, and the Metadata are all being written at the moment of failure and all three become corrupted. It is reasonably unlikely that all three will be broken at once.
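The write-ahead, roll-back cycle described above can be sketched in a few lines of Python. This is a toy undo-style journal over an in-memory "disk" (a dict of block number to contents), and a simulated crash stands in for the power outage. Everything here, including `run_transaction`, `recover` and `SimulatedCrash`, is hypothetical. A real journal lives on disk and must itself be safely written before any of the operation is carried out, and many real journalling filesystems, ext3 and ext4 among them, use redo logging instead: they replay fully committed transactions after a crash and discard incomplete ones, reaching the same all-or-nothing result by a different route.

```python
from typing import Optional

class SimulatedCrash(Exception):
    """Raised to mimic a power loss partway through an operation."""

def run_transaction(disk: dict[int, bytes],
                    journal: list[tuple[int, Optional[bytes]]],
                    writes: list[tuple[int, bytes]],
                    crash_after: Optional[int] = None) -> None:
    # 1. Write the operation down ahead of time: remember each block's old contents.
    journal.extend((block, disk.get(block)) for block, _ in writes)
    # 2. Perform the operation, optionally "crashing" after some number of writes.
    for n, (block, data) in enumerate(writes):
        if crash_after is not None and n == crash_after:
            raise SimulatedCrash(f"power lost after {n} of {len(writes)} writes")
        disk[block] = data
    # 3. The operation finished, so remove it from the journal.
    journal.clear()

def recover(disk: dict[int, bytes],
            journal: list[tuple[int, Optional[bytes]]]) -> None:
    """At mount time: roll back anything still in the journal, newest first."""
    for block, old in reversed(journal):
        if old is None:
            disk.pop(block, None)  # the block held nothing before the operation
        else:
            disk[block] = old
    journal.clear()

disk = {1: b"old-A", 2: b"old-B"}
journal: list[tuple[int, Optional[bytes]]] = []
try:
    run_transaction(disk, journal, [(1, b"new-A"), (2, b"new-B")], crash_after=1)
except SimulatedCrash:
    pass
print(disk)  # {1: b'new-A', 2: b'old-B'}  (half-finished)
recover(disk, journal)
print(disk)  # {1: b'old-A', 2: b'old-B'}  (rolled back)
```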

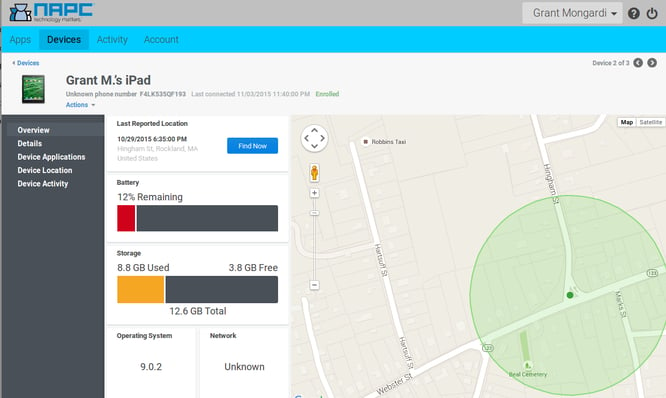

I live in a "reasonably unlikely" world. What do I do?

Whether or not you're a lucky person, you should always have a good disaster recovery plan. Not only should you have one, you should schedule regular tests of it, or at the very least audits. NAPC has years and years of experience in this area and can discuss with you the risks associated with your particular configuration, as well as the possible disaster recovery scenarios in the event of a catastrophic failure. To set up such a discussion, contact your Sales representative, or just send us an email!